As Street Fight lead analyst Mike Boland wrote in a white paper this year, visual search is a new discovery paradigm in which consumers use smartphone cameras to find out more about the products right in front of them, marshaling the resources of the Web. Think of a Walmart shopper whipping out her smartphone to research a product on the shelf, expediting a process that would otherwise involve either calling over an employee or searching for the product on Google or Amazon.

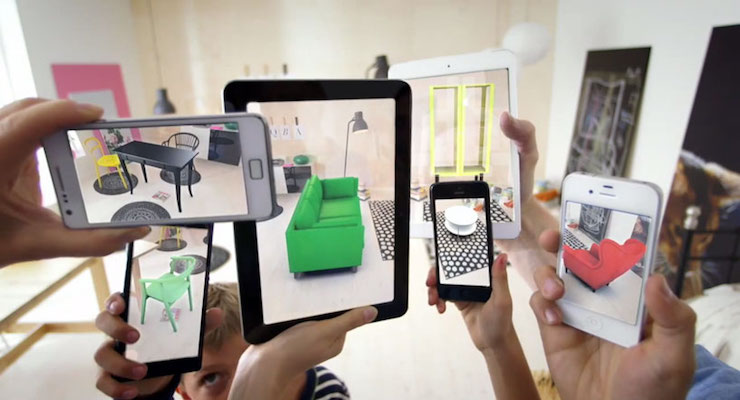

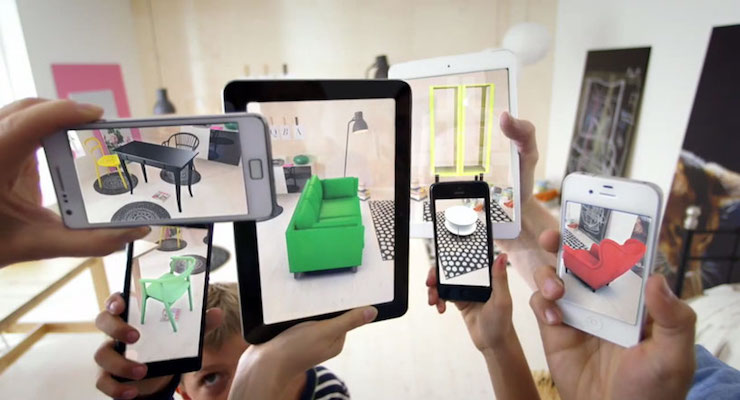

While visual search isn’t exactly catching on like wildfire yet, its evolution is buttressed by powerful recent developments in the tech industry. Among these: smartphones are increasingly ubiquitous and efficient, and we’re all more accustomed to using them; investment in AI from both big companies and startups is widespread, making machine vision more effective; and augmented reality (AR), a similar modality in which tech overlays graphics onto images captured via camera lens, is taking off.

Below are a few ways visual search will play out in local and retail in 2019.

Google Glass marked a notorious failure for AR and visual search, but these new media for navigating the world will take off thanks to the 3.2 billion smart devices we carry around in our pockets. Apple and Google have released ARKit and ARCore, respectively, giving a wide swath of mobile app developers the ability to innovate in AR app creation. This brings AR to devices consumers are already using instead of hoping that consumers will carry new hardware to access new capabilities.

ARCore and ARKit both function on the basis of SLAM, or simultaneous localization and mapping. This is the technique that allows smartphones to get their bearings in relation to the physical world and overlay graphics and other information correctly onto their material surroundings. Notice that localization is fundamental to what visual innovation in mobile looks like. For businesses and retailers specifically, visual search, already seeing innovation from the likes of Google, will allow customers to point their smartphone cameras at storefronts to obtain information such as hours and menus. It will also allow customers in-store to gauge the quality of the products in front of them. As consumers come to expect the efficiency of visual search, brands that fail to accommodate that expectation will fall behind, the same way businesses poorly adjusted for apps and the mobile web have struggled in the past few years.

This is the reason Snap acquired location-data firms Placed and Zenly. Making visual search and AR work depends on solid location-data infrastructure: knowing where the consumer is and how to make sense of what’s around her. But location data isn’t just the key that makes visual search and AR functional; it’s also the capital that visual search and AR will create, offering new opportunities for location-driven attribution. Because every visual search fundamentally depends on proximity to a physical object, this medium presents a rich opportunity for measurement, allowing ad tech firms and brands to gauge whether their ads have truly driven a consumer to a physical location.

This is only the beginning of Street Fight’s coverage of visual search and AR in 2019. Hit me up at jzappa@streetfightmag.com with your thoughts, and stay tuned for a slew of updates in the weeks to come.

Joe Zappa is Street Fight’s managing editor.