Snap wants to light up shopping on Snapchat, especially for fashion, and it just took a big step forward.

The social media platform kicked off its Partner Summit on Thursday with a slew of announcements covering shopping features (online and in-store), new ways to use the Snapchat camera and a variety of partnership news involving Prada, Farfetch, Poshmark, American Eagle and others.

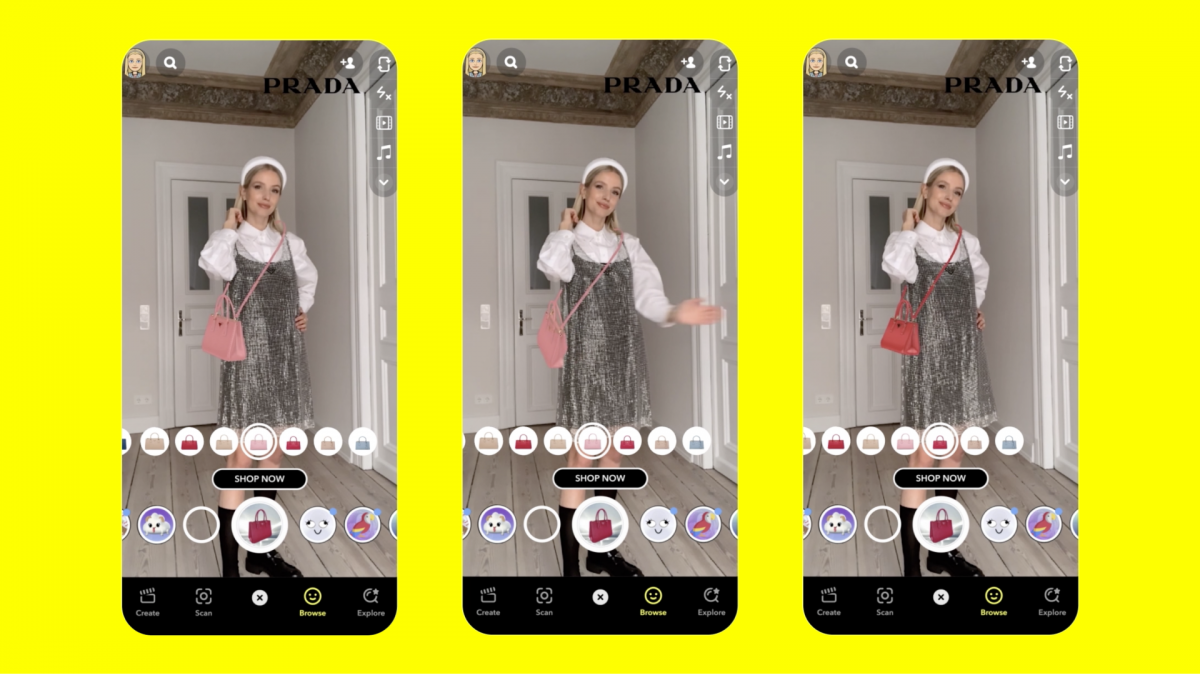

One of the most notable reveals among the many debuts is augmented reality try-on for fashion, which the company is launching with Prada and Farfetch.

Farfetch’s experience will allow Virgil Abloh fans to virtually try on Off-White’s Melange Tomboy Jacket or Maize & Camou Windbreakers, while Prada devotees can pretend they’re Hunter Schafer and digitally don one of the brand’s marquee Galleria bags.

Even better, Snapchat users won’t have to fumble with their devices to switch colors or styles.

Thanks to natural language processing, the Farfetch integration comes with 40 built-in voice commands, such as “Can you show me a windbreaker jacket?” or “Maybe something with a pattern?”

From there, users can keep trying on items, share their looks with followers or jump straight to purchasing.

Prada’s AR experience adds gesture control to the equation. People can switch from a magenta Galleria to an emerald one just by waving a hand in the air.

The intuitive nature of such human-computer interactions, along with advances in mobile camera technology, amplifies the sense of magic that’s crucial to fashion in general and to luxury brands in particular.

For Farfetch, that aligns with its own strategy and vision.

“We’ve been very focused on Farfetch’s Luxury New Retail strategy,” explained Gareth Jones, chief marketing officer. “Jose, our chief executive officer, always says that the key question we think about every day is ‘How will people shop in five to 10 years’ time?’ This is what we are thinking about — we see Luxury New Retail as our strategy to drive how luxury retailing will look both now, but in the future.”

For the luxury fashion retailer, the camera is one of the keys to this journey. “What Snap provides with its camera strategy is an amazing pathway to bring fashion to the end user, which is incredibly exciting,” he added. “Snap’s technology is helping us to bring the immersive experience of fashion to customers in a really compelling way, which hasn’t really been done before.”

According to Snap, bringing AR try-on to fashion has been a long time in the making.

While sectors like beauty, footwear and furniture have nailed virtual try-ons for fun or shopping, fashion has struggled. It turns out that mapping virtual clothes onto a human form in any believable or realistic way is incredibly complicated.

The tech has to understand how fabrics move, the effect of gravity and how a piece of clothing would lie on different body shapes, among many other variables, Carolina Arguelles, Snap’s global product lead for AR, explained in an interview.

“It’s very difficult to try on apparel in AR today. It’s really hard for that to be realistic and adapt to your unique body size and style and fit, and for that fabric to be represented naturally,” she said. “It should move how it’s supposed to move because of things like gravity — those are things that have been very difficult to solve from a technological perspective.”

But Snap has plenty of reason to tackle the challenge. More than 200 million people use its AR features, and nearly one-third of total spending among teenagers goes to apparel and accessories, the company said, which prompted Snap to dig in and try to crack the problem.

The tech company leaned on machine learning for the two key developments that make AR clothing try-ons possible in Snapchat: cloth simulation and 3D body mesh.

Its machine learning and AI teams have been working on some of these cutting-edge developments for years, said Arguelles. Early 3D body mesh work began with fun AR lenses, and that led to a deeper, more detailed understanding of things like where the joints are and the width, depth and dimensions of the full body.

The body mesh powers the AR lenses, but it also fuels the gesture control feature, since it understands how the body moves.

“Those are coupled with our cloth simulation ML, which is really a trained model to understand if fabrics should move in these directions, based on the environment, based on gravity, based on the movement of the user,” Arguelles continued. “It’s those two things that are really enabling try-on to start to feel a lot more natural.”

However, Arguelles noted that the work is ongoing, framing these advances as a step toward fully fleshed-out fashion AR, complete with features like remote fitting. That’s an area of intense interest for Snap going forward, and the company’s recent acquisition of Fit Analytics will play a major role in it.

For now, the company is focused on its current spate of announcements, among them a new scanning feature for clothing.

More than 170 million Snapchatters scan the world around them monthly, according to the company’s data, and they’ve already identified millions of objects, from wine bottles to dog breeds and plants. Now apparel joins the fray with Screenshop.

Screenshop is exactly what it sounds like: iOS users in the U.S. will be the first able to scan friends’ outfits in real life, so Screenshop’s catalogue can pull up similar looks and relevant recommendations from hundreds of brands. Eventually, the tool will also let people search using screenshots already saved in their camera rolls, so those pics will do more than just clutter up their photo storage.

The company is also focused on making shopping in the Snapchat universe more social.

Social shopping is Poshmark’s specialty. So perhaps it’s no surprise that the peer-to-peer resale marketplace, which was the first retail partner to adopt Snap Kit in 2018, is joining forces with Snap again on Poshmark Minis.

Minis are simplified versions of apps available in Snap’s chat section, and Poshmark’s version allows Snapchat users to join Posh Parties. The real-time virtual shopping events — where people buy and sell products, or shop with friends — are a fundamental part of the Poshmark experience. Now Snapchatters can get in on the action.

“One of the things that we’ve worked with on Snapchat in the past is giving them a feed of our products. But usually those feed items get surfaced based on your actions, or any interests. So it’s based on users doing something and we then surface [those] specific items from our catalogue,” Poshmark chief marketing officer Steven Tristan Young told WWD.

Now, after three to four months of development on Poshmark Mini, the marketplace is expanding its “party architecture” to extend social shopping outside the bounds of its own app.

“It’s sort of like creating the Poshmark experience within the Snapchat ecosystem,” he explained.

Indeed, it could pull in the universe of Snapchatters, which now numbers more than 500 million monthly active users. Snap newly reports that it reaches nearly one of every two smartphone users in the U.S., and overseas, roughly 40 percent of its community now comes from outside North America and Europe. In India, for instance, it has grown daily active users by more than 100 percent year-over-year in each of the last five quarters.

That is a massive potential audience that could enter the Poshmark fold, whether or not they’re existing customers. Snap also shares aggregated Mini usage metrics with developers and partners like Poshmark, such as how much time people spend in the experience, so the marketplace could gain access to a potentially huge stream of data as well.

Poshmark isn’t Snapchat’s only social shopping experience. The platform also wants to make social shopping look more lifelike, thanks to another partnership, this one with American Eagle.

Snap’s Connected Lenses let users invite friends into a shared Lens experience that takes them to a virtual but realistic AE store, where pals can chat live, pick or compare outfits and build looks together on a virtual mannequin.

Considering that shopping has always been a social activity in the real world, it has taken a remarkably long time for it to land in e-commerce, especially on a social media app that’s now hyper-focused on online shopping.

“The reason why it took us so long was because we wanted to foundationally make sure that our app retains that important value of close friends, and friendships,” Snap’s Arguelles said. “And then simultaneously, we knew that if we were going to insert brands, we needed to build the right platform to do it, we needed to do it in a way that was going to give them value back that was scalable. And that was something we knew we could grow over time.”

It’s been a long journey to get here, going from cartoonish sunglasses and sombreros to a burgeoning array of realistic virtual try-on and shopping experiences. But it’s clear now that each step was a building block for Snap.

In other news, the company revealed AR partnerships with Swiss luxury watchmaker and jeweler Piaget and online eyeglass company Zenni, as well as new tech features like wrist-tracking technology for AR watch and jewelry try-ons.

Further, the introduction of API-enabled Lenses allows businesses “to tap into dynamic, automatic ways to feature real-time content in AR. Through Snap’s partnership with Perfect Corp., the Estée Lauder Cos. Inc. will be among the first to leverage Snap’s AR Shopping platform tools,” the company said. “By integrating their product catalogue through Snap’s API in Business Manager, brands like MAC Cosmetics can build lenses from new AR Shopping templates and publish them to Snapchat, based on real-time product inventory.”

With these Dynamic Shopping Lenses, consumers will be able to browse up-to-date inventory, virtually try on and make product purchases, without leaving the app.

Public profiles for businesses are another feature coming to the app, allowing any partner to set up a permanent residence on Snapchat and showcase AR Lenses and Stories, plus a new “Shop” page. Snap has been beta-testing this since July 2020, and plans to eventually allow public profiles to integrate with more parts of the platform.

Of course, since the event is as much a developer conference as a partner summit, it also goes into more granular matters like Snap’s “Inclusive Camera,” for which the company worked with directors of photography from the film industry to learn techniques that best capture darker skin tones.